Cable vs

At DockYard, we transitioned our backend development from Ruby and Rails to Elixir and Phoenix once it became clear that Phoenix better served our clients' needs to take on the modern web. As we've seen, Phoenix is Not Rails, but we borrow some of its great ideas. We were also delighted to give back in the other direction when Rails announced that Rails 5.0 would be shipping with Action Cable, a feature that takes inspiration from Phoenix Channels.

Now that Rails 5.0 has been released, we have clients with existing Rails stacks asking if they should use Action Cable for their real-time features, or jump to Phoenix for more reliable and scalable applications. When making architecture decisions, in any language or framework, you should always take measurements to test your assumptions and needs. To measure how Phoenix channels and Action Cable handle typical application work-flows, we created a chat application in both frameworks and stressed each under varying workloads.

How the tests were designed

For our measurements, we used the tsung benchmarking client to open WebSocket connections to each application. We added XML configuration to send specific Phoenix and Rails protocol messages to open channels on the established connection and publish messages. For hardware, we used two Digital Ocean 16GB, 8-core instances: one for the client and one for the server.

The work-flows for each tsung client connection were as follows:

- open a WebSocket connection to the server

- create a single channel subscription on the connection, to a chat room chosen at random

- periodically send a message to the chat room, at a random interval of 10s-60s, to simulate messaging across members in the room
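
As a rough illustration of the work-flow above, here is a minimal Python sketch of what one simulated client does over time. It is not the tsung XML configuration used in the tests. The function name `client_schedule` and its parameters are hypothetical, and drawing the 10s-60s gap from a uniform distribution is an assumption.

```python
import random


def client_schedule(num_rooms, duration_s, rng):
    """Return (room, send_times) for one simulated client.

    Hypothetical helper: models a client that joins one random room and
    then sends a message every 10s-60s (assumed uniformly distributed).
    """
    room = rng.randrange(num_rooms)  # chat room chosen at random
    send_times = []
    t = rng.uniform(10, 60)  # wait before the first message
    while t <= duration_s:
        send_times.append(t)
        t += rng.uniform(10, 60)  # random gap before the next message
    return room, send_times


# Example: one client, 50 rooms, a 10-minute run.
rng = random.Random(42)  # seeded so the run is repeatable
room, send_times = client_schedule(num_rooms=50, duration_s=600, rng=rng)
print(f"joined room {room}, sent {len(send_times)} messages")
```

Each client is mostly idle, so the load on the server comes from the number of open connections and the fan-out of each broadcast rather than from any single chatty client.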

On the server, the channel code for Rails and Phoenix is quite simple:

After establishing N rooms, with varying numbers of users per room, we measured each application's responsiveness. We tested performance by joining a random room from the browser and timing the broadcasts from our local browser to all members in the room. As we increased the number of users per room, we measured the change in broadcast latency. We recorded short clips of each application's behavior under different loads.

This simulates a "chat app", but the work-flow applies equally to a variety of applications: real-time updates to visitors reading articles, streaming feeds, collaborative editors, and so on. As we evaluate the results, we'll explore how the numbers relate to different kinds of applications.

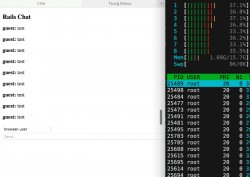

Rails Memory Leaks

After creating the Rails chat application, setting up Redis, and deploying the application to our instance, we immediately observed a memory leak. It was visible just by refreshing a browser tab and watching the memory grow, never to be freed. The following recording shows this in action (sped up 10x):

We searched recently reported bugs around this area, and found an issue related to Action Cable failing to call socket.close when cleaning up connections. This patch has been applied to the 5-0-stable branch, so we updated the app to the unreleased branch and re-ran the tests. The memory leak persisted.

We haven't yet isolated the source of the leak, but given the simplicity of the channel code, it must be within the Action Cable stack. This leak is particularly concerning since Rails 5.0 has been released for some time now and the 5-0-stable branch itself has unreleased memory leak patches that are more than 30 days old.

Scalability

We set the memory leak issue aside and proceeded with our tests for the following scenarios:

- Maximum number of rooms supported by a single server, with 50 users per room

- Maximum number of rooms supported by a single server, with 200 users per room

Note: For Phoenix, in every scenario we maxed out the client server's ability to open more WebSocket connections, giving us 55,000 users to simulate for our tests. Browser -> Server latency should also be considered when evaluating broadcast latency in these tests.

50 users per room

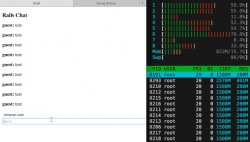

Rails: 50 rooms, 2500 users:

Responsiveness was speedy at 50 rooms, so we upped the room count to 75, giving us 3750 users.

Rails: 75 rooms, 3750 users:

Here, we can see Action Cable falling behind on availability when broadcasting, with messages taking an average of 8s to be broadcast to all 50 users in a given room. For most applications, this level of latency is not acceptable, so the maximum this server can support is somewhere between 50 and 75 rooms, given 50 users per room.

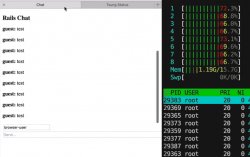

Phoenix: 1100 rooms, 55,000 users (maxed 55,000 client connections)

We can see that Phoenix responds on average in 0.25s, and is capped at 1100 rooms only because of the 55,000 client limit on the tsung box.
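
To put the three scenarios side by side, the following back-of-envelope Python sketch estimates the message volume implied by the test work-flow. These are estimates, not measurements. The helper `expected_load` is hypothetical, and it assumes the 10s-60s send interval is uniformly distributed (a mean of 35s) and that every broadcast is delivered to all members of the room.

```python
def expected_load(rooms, users_per_room, min_gap_s=10, max_gap_s=60):
    """Estimate users, inbound msgs/s, and outbound deliveries/s.

    Hypothetical helper: assumes a uniform send interval and that each
    message fans out to every member of its room.
    """
    users = rooms * users_per_room
    mean_gap_s = (min_gap_s + max_gap_s) / 2  # 35s for 10s-60s
    inbound_per_s = users / mean_gap_s  # messages published by clients
    deliveries_per_s = inbound_per_s * users_per_room  # broadcast fan-out
    return users, inbound_per_s, deliveries_per_s


# The three 50-users-per-room scenarios described above.
for label, rooms in [("Rails", 50), ("Rails", 75), ("Phoenix", 1100)]:
    users, inbound, deliveries = expected_load(rooms, users_per_room=50)
    print(f"{label}: {rooms} rooms, {users} users, "
          f"~{inbound:.0f} msgs/s in, ~{deliveries:.0f} deliveries/s out")
```

Under these assumptions, fan-out multiplies every published message by the room size, so outbound deliveries grow much faster than inbound messages as rooms are added.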
